Difference Between Mathematics And Statistics Pdf

Statistics has a sort of funny and peculiar relationship with mathematics. In a lot of university departments, they're lumped together and you have a Department of Mathematics and Statistics". Other times, it's grouped as a branch in applied math. Pure mathematicians tend to either think of it as an , or dislike it because it's " not rigorous enough".

After having studied both, I feel it's misleading to say that statistics is a branch of math . Rather, statistics is a separate discipline that uses math, but differs in fundamental ways from other branches of math, like combinatorics or differential equations or group theory. Statistics is the study of uncertainty, and this uncertainty permeates the subject so much that mathematics and statistics are fundamentally different modes of thinking .

Above: if pure math and statistics were like games

Definitions and Proofs

Math always follows a consistent definition-theorem-proof structure. No matter what branch of mathematics you're studying, whether it be algebraic number theory or real analysis, the structure of a mathematical argument is more or less the same.

You begin by defining some object, let's say a wug . After defining it, everybody can look at the definition and agree on which objects are wugs and which objects are not wugs.

Next, you proceed to prove interesting things about wugs, using marvelous arguments like proof by contradiction and induction. At every step of the proof, the reader can verify that indeed, this step follows logically from the definitions. After several of these proofs, you now understand a lot of properties of wugs and how they connect to other objects in the mathematical universe, and everyone is happy.

In statistics, it's common to define things with intuition and examples, so " you know it when you see it" ; things are rarely so black-and-white like in mathematics. This is born out of necessity: statisticians work with real data, which tends to be messy and doesn't lend itself easily to clean, rigorous definitions.

Take for example the concept of an " outlier". Many statistical methods behave badly when the data contains outliers, so it's a common practice to identify outliers and remove them. But what exactly constitutes an outlier? Well, that depends on many criteria, like how many data points you have, how far it is from the rest of the points, and what kind of model you're fitting.

In the above plot, two points are potentially outliers. Should you remove them, or keep them, or maybe remove one of them? There's no correct answer, and you have to use your judgment.

For another example, consider p-values. Usually, when you get a p-value under 0.05, it can be considered statistically significant. But this value is merely a guideline, not a law – – it's not like 0.048 is definitely significant and 0.051 is not.

Now let's say you run an A/B-test and find that changing a button to blue results in higher clicks, with p-value of 0.059. Should you recommend to your boss that they make the change? What if you get 0.072, or 0.105? At what point does it become not significant? There is no correct answer, you have to use your judgment.

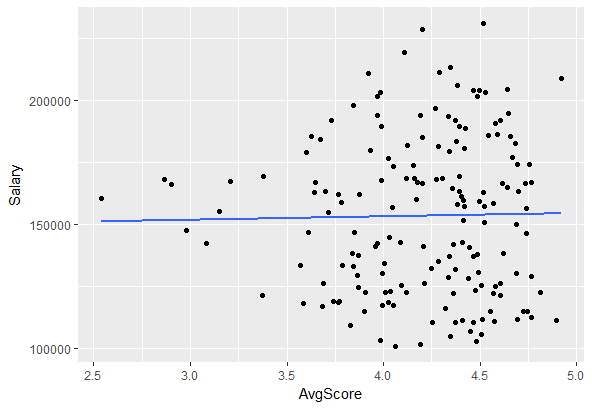

Take another example: heteroscedasticity. This is a fancy word that means the variance is unequal for different parts of your dataset. Heteroscedasticity is bad because a lot of models assume that the variance is constant, and if this assumption is violated then you'll get wrong results, so you need to use a different model.

Is this data heteroscedastic, or does it only look like the variance is uneven because there are so few points to the left of 3.5? Is the problem serious enough that fitting a linear model is invalid? There's no correct answer, you have to use your judgment.

Another example: consider a linear regression model with two variables. When you plot the points on a graph, you should expect the points to roughly lie on a straight line. Not exactly on a line, of course, just roughly linear. But what if you get this:

There is some evidence of non-linearity, but how much " bendiness" can you accept before the data is definitely not " roughly linear" and you have to use a different model? Again, there's no correct answer, and you have to use your judgment.

I think you see the pattern here. In both math and statistics, you have models that only work if certain assumptions are satisfied. However, unlike math, there is no universal procedure that can tell you whether your data satisfies these assumptions.

Here are some common things that statistical models assume:

- A random variable is drawn from a normal ( Gaussian) distribution

- Two random variables are independent

- Two random variables satisfy a linear relationship

- Variance is constant

Your data is not going to exactly fit a normal distribution, so all of these are approximations. A common saying in statistics goes: " all models are wrong, but some are useful".

On the other hand, if your data deviates significantly from your model assumptions, then the model breaks down and you get garbage results. There's no universal black-and-white procedure to decide if your data is normally distributed, so at some point you have to step in and apply your judgment.

Aside: in this article I'm ignoring Mathematical Statistics, which is the part of statistics that tries to justify statistical methods using rigorous math. Mathematical Statistics follows the definition-theorem-proof pattern and is very much like any other branch of math. Any proofs you see in a stats course likely belongs in this category.

Classical vs Statistical Algorithms

You might be wondering: without rigorous definitions and proofs, how do you be sure anything you're doing is correct? Indeed, non-statistical ( mathematical) and statistical methods have different ways of judging " correctness".

Non-statistical methods use theory to justify their correctness. For instance, we can prove by induction that Dijkstra's algorithm always returns the shortest path in a graph, or that quicksort always arranges an array in sorted order. To compare running time, we use Big-O notation, a mathematical construct that formalizes runtimes of programs by looking at how they behave as their inputs get infinitely large.

Non-statistical algorithms focus primarily on worst-case analysis , even for approximation and randomized algorithms. The best known approximation algorithm for the Traveling Salesman problem has an approximation ratio of 1.5 – – this means that even for the worst possible input, the algorithm gives a path that's no more than 1.5 times longer than the optimal solution. It doesn't make a difference if the algorithm performs a lot better than 1.5 for most practical inputs, because it's always the worst case that we care about.

A statistical method is good if it can make inferences and predictions on real-world data. Broadly speaking, there are two main goals of statistics. The first is statistical inference : analyzing the data to understand the processes that gave rise to it; the second is prediction : using patterns from past data to predict the future. Therefore, data is crucial when evaluating two different statistical algorithms. No amount of theory will tell you whether a support vector machine is better than a decision tree classifier – – the only way to find out is by running both on your data and seeing which one gives more accurate predictions.

Above: the winning neural network architecture for ImageNet Challenge 2012. Currently, t

Above: the winning neural network architecture for ImageNet Challenge 2012. Currently, t

In machine learning, there is still theory that tries to formally describe how statistical models behave, but it's far removed from practice. Consider, for instance, the concepts of VC dimension and PAC learnability. Basically, the theory gives conditions under which the model eventually converges to the best one as you give it more and more data, but is not concerned with how much data you need to achieve a desired accuracy rate.

This approach is highly theoretical and impractical for deciding which model works best for a particular dataset. Theory falls especially short in deep learning, where . Even with models that are theoretically well-understood, the theory can only serve as a guideline; you still need cross-validation to determine the best hyperparameters.

Modelling the Real World

Both mathematics and statistics are tools we use to model and understand the world, but they do so in very different ways. Math creates an idealized model of reality where everything is clear and deterministic; statistics accepts that all knowledge is uncertain and tries to make sense of the data in spite of all the randomness. As for which approach is better – – both approaches have their advantages and disadvantages.

Math is good for modelling domains where the rules are logical and can be expressed with equations. One example of this is physical processes: just a small set of rules is remarkably good for predicting what happens in the real world. Moreover, once we've figured out the mathematical laws that govern a system, they are infinitely generalizable — Newton's laws can accurately predict the motion of celestial bodies even if we've only observed apples falling from trees. On the other hand, math is awkward at dealing with error and uncertainty. Mathematicians create an ideal version of reality, and hope that it's close enough to the real thing.

Statistics shines when the rules of the game are uncertain. Rather than ignoring error, statistics embraces uncertainty. Every value has a confidence interval where you can expect it to be right about 95% of the time, but we can never be 100% sure about anything. But given enough data, the right model will separate the signal from the noise. This makes statistics a powerful tool when there are many unknown confounding factors, like modelling sociological phenomena or anything involving human decisions.

The downside is that statistics only works on the sample space where you have data; most models are bad at extrapolating past the range of data that it's trained on. In other words, if we use a regression model with data of apples falling from trees, it will eventually be pretty good at predicting other apples falling from trees, but it won't be able to predict the path of the moon. Thus, math enables us to understand the system at a deeper, more fundamental level than statistics.

Difference Between Mathematics And Statistics Pdf

Source: https://luckytoilet.wordpress.com/2017/09/06/whats-the-difference-between-mathematics-and-statistics/

Posted by: martineztrallese.blogspot.com

0 Response to "Difference Between Mathematics And Statistics Pdf"

Post a Comment